Artificial Intelligence (AI) has the potential to support and enhance the development of human capabilities across all areas of ECU's work, consistent with our purpose to transform lives and enrich society.

That is why we have developed this framework.

The release of ChatGPT in November 2022 marked a tipping point in higher education's engagement with AI. AI was already at various stages of exploration, adoption and use across the sector. However, the capabilities of Generative AI have prompted institutions to review how far AI more generally has been defined and appropriately accounted for in policies, procedures and practices.

AI presents enormous opportunities and significant risks to individuals, organisations, and society. ECU recognises this and commits through this framework to engaging both constructively and critically with AI to advance our purpose, strategic vision, and values.

More about this framework

The purpose of this framework is to empower and enable staff and students to use AI productively and ethically, in line with ECU’s vision to lead the sector in the educational experience, in research with impact, and in positive contributions to industry and communities.

The framework is designed to support judgements and guide institutional decision-making. As far as practicable, it also leverages existing policies and processes to identify and manage risk and to enhance human capability.

It is based on AI research and policy from a range of leading bodies and informed by best practice in the development of effective organisational ethics frameworks.

Note: The framework was developed by Professor Rowena Harper, Deputy Vice-Chancellor (Education), and Professor Edward Wray-Bliss, The Stan Perron Professorial Chair in Business Ethics, in consultation with the AI Steering Committee and Working Groups.

Elements of the framework

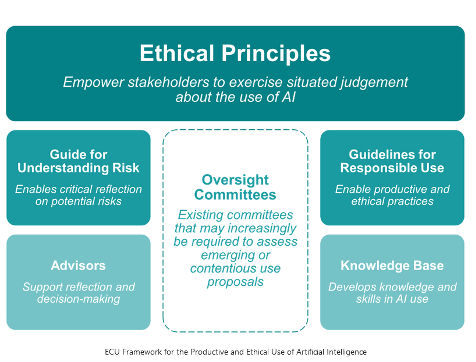

The framework comprises six elements.

Five Ethical Principles

ECU's Framework for the Productive and Ethical Use of Artificial Intelligence has at its core a set of five Ethical Principles. These are designed to empower staff and students to make situated judgements about AI across all levels and areas of the organisation so that we can collectively navigate our ethical engagement with AI.

Benchmarking indicates that common ethical principles for the use of AI align well with ECU’s values. Grounding the AI Ethical Principles in existing organisational values should give them greater authenticity for all stakeholders.
